Silent Compiler Bug De-duplication via Three-Dimensional Analysis

Chen Yang, Junjie Chen, Xingyu Fan, Jiajun Jiang, Jun Sun

- Read: 03 Aug 2025

- Published: 13 Jul 2023

ISSTA 2023: Proceedings of the 32nd ACM SIGSOFT International Symposium on Software Testing and Analysis Pages 677 - 689

https://dl.acm.org/doi/10.1145/3597926.3598087

Q&A

What are the motivations for this work?

- See Abstract, Introduction, Section 2.

- Evaluating the quality of compilers is critical.

- Bug duplication problem: Many test failures are caused by the same compiler bug.

- Silent compiler bugs make the bug duplication problem worse due to the lack of crash feedback.

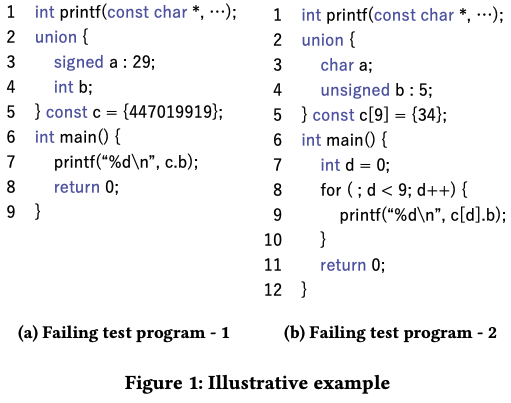

- The two bug-triggering test programs shown above correspond to the same bug in GCC-4.4.0, and both produce inconsistent outputs under the optimization levels -O0 and -O1. Yet they achieve significantly different compiler coverage, which undermines the coverage-based de-duplication approach used by prior work.

What is the proposed solution?

- See Abstract, Section 3.

- Characterize each silent bug (b) through three dimensions (3D) of information from the testing process:

- test program p

- optimizations o

- test execution c

- Systematically conduct causal analysis to identify bug-causal features in each of the three dimensions for more accurate bug de-duplication (the authors argue that only a small portion of the information in p, o, and c is bug-causal).

- Measure the distance between test failures based on the 3D bug-causal features, and rank failures that are more likely to be caused by different silent bugs higher.

- For test program p: use a test program reduction tool (C-Reduce) to reduce each bug-triggering test program, then mutate the reduced program until it passes, and take the minor differences between the failing and passing programs as bug-causal features.

- Mutation is performed by applying mutation rules, which fall into four categories: identifier-level, operator-level, delimiter-level, and statement-level mutation.

- The differences between the failing and passing programs are extracted at the AST level, which represents bug-causal features more comprehensively.

- The AST operations are sorted by frequency and extracted as a vector; D3 then measures the distance between test failures using these vectors.

- For optimizations o: use delta debugging (a binary-search-style reduction) to identify the minimal set of bug-triggering optimizations.

- For test execution c: collect the function coverage achieved by the bug-triggering test program as the original information in this dimension (similar to existing work). D3 then identifies the bug-causal functions among all covered functions by estimating their suspiciousness scores with spectrum-based bug localization, and treats the highly suspicious functions as the bug-causal features in this dimension.

- Finally, D3 prioritizes the test failures by computing distances from the features of the three dimensions and ranking the failures with the furthest-point-first (FPF) algorithm.
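The delta-debugging step for the optimization dimension can be sketched as follows. This is a minimal, simplified (complement-only) variant of Zeller's ddmin, not D3's implementation. It assumes the failure can be reproduced from an explicit list of optimization flags; the flag list and the `fake_fails` oracle are hypothetical stand-ins for recompiling the reduced test program with a flag subset and comparing its output against -O0.

```python
from typing import Callable, List, Sequence


def ddmin(options: Sequence[str], fails: Callable[[List[str]], bool]) -> List[str]:
    """Simplified (complement-only) ddmin: shrink `options` until no single
    option can be dropped without the failure disappearing."""
    items = list(options)
    assert fails(items), "the full option set must reproduce the failure"
    n = 2  # current number of chunks
    while len(items) >= 2:
        chunk = len(items) // n
        reduced = False
        start = 0
        while start < len(items):
            # Try dropping one chunk and keeping only the complement.
            complement = items[:start] + items[start + chunk:]
            if fails(complement):
                items = complement
                n = max(n - 1, 2)
                reduced = True
                break
            start += chunk
        if not reduced:
            if n == len(items):  # already testing single options
                break
            n = min(n * 2, len(items))  # refine the partition
    return items


# Hypothetical oracle: pretend the wrong-code bug appears only when both
# -ftree-ccp and -ftree-fre are enabled (invented for illustration).
def fake_fails(opts: List[str]) -> bool:
    return "-ftree-ccp" in opts and "-ftree-fre" in opts


flags = ["-ftree-ccp", "-ftree-dce", "-ftree-sra", "-ftree-fre", "-fmerge-constants"]
print(ddmin(flags, fake_fails))  # ['-ftree-ccp', '-ftree-fre']
```

Each successful complement removes a whole chunk at once, which is where the binary-search-like speedup over trying flags one by one comes from.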
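For the test-execution dimension, spectrum-based localization can be illustrated with the Ochiai formula. Ochiai is one common choice picked here for illustration; these notes do not state which formula D3 uses. The availability of passing runs (for example, from passing program variants) is an assumption, and all function names are hypothetical.

```python
import math
from typing import Dict, List, Set


def ochiai_scores(failing: List[Set[str]], passing: List[Set[str]]) -> Dict[str, float]:
    """Ochiai suspiciousness: ef / sqrt(total_failed * (ef + ep)).

    failing / passing: one set of covered function names per test run.
    """
    total_failed = len(failing)
    functions = set().union(*failing, *passing)
    scores: Dict[str, float] = {}
    for fn in functions:
        ef = sum(fn in cov for cov in failing)  # failing runs that cover fn
        ep = sum(fn in cov for cov in passing)  # passing runs that cover fn
        denom = math.sqrt(total_failed * (ef + ep))
        scores[fn] = ef / denom if denom else 0.0
    return scores


def top_suspicious(scores: Dict[str, float], k: int) -> List[str]:
    """The k most suspicious functions (ties broken by name for determinism)."""
    return sorted(scores, key=lambda fn: (-scores[fn], fn))[:k]


# Hypothetical coverage: one failing program and two passing variants.
failing = [{"parse_expr", "fold_const", "vrp_pass"}]
passing = [{"parse_expr", "fold_const"}, {"parse_expr"}]
scores = ochiai_scores(failing, passing)
print(top_suspicious(scores, 2))  # ['vrp_pass', 'fold_const']
```

A function covered only by the failing run scores 1.0, while functions that passing runs also exercise are pushed down; keeping the top-k then yields the "highly suspicious functions" used as features.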
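The distance-and-ranking steps described above (per-dimension features, distance between failures, FPF ordering) can be sketched as follows. The per-dimension metrics (cosine distance over AST-operation counts, Jaccard distance over optimization and function sets), their unweighted sum, the choice of seed failure, and the AST-operation labels are all assumptions for illustration; D3's actual distance definitions may differ.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass
class Failure:
    name: str
    ast_ops: Counter        # p: counts of AST operations in the fail/pass diff
    opts: FrozenSet[str]    # o: minimal bug-triggering optimizations
    funcs: FrozenSet[str]   # c: highly suspicious compiler functions


def cosine_distance(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    if na == 0 or nb == 0:
        return 0.0 if na == nb else 1.0  # both empty: same; one empty: unrelated
    return 1.0 - dot / (na * nb)


def jaccard_distance(a: FrozenSet[str], b: FrozenSet[str]) -> float:
    union = a | b
    return 1.0 - len(a & b) / len(union) if union else 0.0


def distance(x: Failure, y: Failure) -> float:
    # Assumption: the three per-dimension distances are simply summed.
    return (cosine_distance(x.ast_ops, y.ast_ops)
            + jaccard_distance(x.opts, y.opts)
            + jaccard_distance(x.funcs, y.funcs))


def fpf_order(failures: List[Failure]) -> List[str]:
    """Furthest-point-first: repeatedly pick the failure farthest from all picked ones."""
    if not failures:
        return []
    order = [0]  # assumption: seed with the first failure
    remaining = list(range(1, len(failures)))
    # Distance from each unpicked failure to its closest picked failure.
    nearest = {i: distance(failures[i], failures[0]) for i in remaining}
    while remaining:
        nxt = max(remaining, key=lambda i: nearest[i])
        remaining.remove(nxt)
        order.append(nxt)
        for i in remaining:
            nearest[i] = min(nearest[i], distance(failures[i], failures[nxt]))
    return [failures[i].name for i in order]


# Hypothetical failures: f1 and f2 look like the same bug, f3 does not.
f1 = Failure("f1", Counter({"update:BinaryOperator": 2, "delete:IntegerLiteral": 1}),
             frozenset({"-ftree-fre"}), frozenset({"fre_pass"}))
f2 = Failure("f2", Counter({"update:BinaryOperator": 2}),
             frozenset({"-ftree-fre"}), frozenset({"fre_pass", "fold_const"}))
f3 = Failure("f3", Counter({"insert:ReturnStmt": 1}),
             frozenset({"-ftree-sra"}), frozenset({"sra_pass"}))
print(fpf_order([f1, f2, f3]))  # ['f1', 'f3', 'f2']
```

Because f2 shares its optimization and most of its features with f1, FPF defers it and puts the unrelated f3 second, so both distinct bugs surface within the first two failures.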

What is the work’s evaluation of the proposed solution?

- See Section 4.

- Used datasets from two sources: the dataset released by an existing study on compiler bug de-duplication (1,275 test failures caused by 35 unique silent bugs in GCC-4.3.0), and the datasets released by existing studies on compiler testing (647 test failures caused by 20 unique silent bugs in GCC-4.4.0, 26 test failures caused by 7 unique silent bugs in GCC-4.5.0, and 116 test failures caused by 6 unique silent bugs in LLVM-2.8).

- In total, used four datasets from four versions of two popular C compilers (i.e., GCC and LLVM), including 2,024 test failures caused by 62 unique silent bugs.

- Used RAUC-n as the metric. It transforms the prioritization result produced by a technique into a plot, where the x-axis represents the number of test failures and the y-axis represents the number of corresponding unique bugs. It then calculates the ratio of the area under the curve for the technique to that for the ideal prioritization. Larger RAUC values mean developers can identify more unique bugs when investigating the same number of test failures, indicating better de-duplication effectiveness.
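A minimal sketch of the RAUC computation described above, assuming the area under a curve is the sum of its step heights at x = 1..N and treating `n` as a hypothetical cut-off on the number of inspected failures (these notes do not define the n in RAUC-n).

```python
from typing import Hashable, List, Optional, Sequence


def unique_bug_curve(bug_ids: Sequence[Hashable]) -> List[int]:
    """y-values: number of unique bugs among the first x inspected failures."""
    seen = set()
    curve = []
    for bug in bug_ids:
        seen.add(bug)
        curve.append(len(seen))
    return curve


def rauc(bug_ids: Sequence[Hashable], n: Optional[int] = None) -> float:
    """Ratio of the (discrete) area under the curve to that of the ideal order.

    bug_ids: root-cause bug of each failure, in the order a technique ranked them.
    n: hypothetical cut-off on how many failures are inspected (all by default).
    """
    total = len(bug_ids)
    n = total if n is None else min(n, total)
    if n <= 0:
        raise ValueError("need at least one inspected failure")
    actual = unique_bug_curve(bug_ids)[:n]
    num_bugs = len(set(bug_ids))
    # Ideal order: every new failure reveals a new bug until all bugs are found.
    ideal = [min(x, num_bugs) for x in range(1, n + 1)]
    return sum(actual) / sum(ideal)


print(rauc(["A", "A", "B", "C"]))       # 7/9 ≈ 0.778
print(rauc(["A", "B", "C", "A"]))       # 1.0
print(rauc(["A", "A", "B", "C"], n=2))  # 2/3 ≈ 0.667
```

Moving the duplicate failure of bug A to the end raises RAUC to 1.0, since every inspected failure then reveals a new bug until all three are found.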

What is your analysis of the identified problem, idea and evaluation?

- Measuring distances between test failures is not an unusual approach in itself. The subject (compiler testing, specifically silent bugs) seems to be the real innovation.

What are the contributions?

- See Introduction.

- D3: a novel technique for addressing the de-duplication problem for silent compiler bugs.

What are future directions for this research?

- See Section 5.

- Overhead: D3 spends 45.64s on average per test program, compared with 1.36s for Tamer and 25.57s for Transformer (two prior techniques).
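Expressed as ratios of the reported averages (simple arithmetic, not a figure from the paper), D3 costs roughly 34 times as much as Tamer per test program and under twice as much as Transformer:

$$
\frac{45.64\,\text{s}}{1.36\,\text{s}} \approx 33.6, \qquad \frac{45.64\,\text{s}}{25.57\,\text{s}} \approx 1.78
$$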

What questions are you left with?

NONE

What is your take-away message from this paper?

NONE
